AI in Higher Education: Transforming Learning, Equity, and Academic Integrity


- The Rise of AI in Universities: Trends and Student Adoption

- Challenges of AI: Digital Inequality, Ethics, and Institutional Responses

- The Path Forward: How Universities Must Adapt to AI Integration

The Rise of AI in Universities: Trends and Student Adoption

The pervasive adoption of AI in higher education is transforming student learning, with 92% of students currently utilizing AI tools. However, challenges persist in the areas of academic integrity, ethical use, and accessibility disparities. Universities must address concerns about fairness and digital inequality by adapting assessment methods, improving AI literacy, and establishing explicit policies to ensure that students are prepared for an AI-driven future.

Artificial intelligence (AI) is rapidly transforming higher education, changing the way students learn, research, and complete their coursework. The Student Generative AI Survey 2025, conducted by the Higher Education Policy Institute (HEPI) in partnership with Kortext, shows a substantial rise in AI adoption among university students in the United Kingdom. According to the survey, 92% of students now use AI in some capacity, up from 66% in 2024. The share of students who have used AI in their assessments has also risen, from 53% the previous year to 88%. These statistics suggest that AI is no longer an optional tool but a fundamental element of higher education.
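As a rough illustration of the scale of these changes, the short Python sketch below computes the percentage-point change and the relative increase for the two year-over-year figures quoted above. The function name `year_over_year` and the dictionary labels are illustrative assumptions, not part of the HEPI report.

```python
def year_over_year(previous_pct: float, current_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent)."""
    point_change = current_pct - previous_pct
    relative_change = point_change / previous_pct * 100
    return point_change, relative_change


# Figures quoted in the paragraph above (2024 value, 2025 value).
# The labels are hypothetical names chosen for this example.
survey = {
    "any AI use": (66, 92),
    "AI use in assessments": (53, 88),
}

for label, (previous, current) in survey.items():
    points, relative = year_over_year(previous, current)
    print(f"{label}: +{points:.0f} points, {relative:.0f}% relative increase")
```

On these figures, overall use rose by 26 percentage points (about 39% in relative terms), and use in assessments rose by 35 points (about 66%).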

Although students acknowledge the advantages of AI in improving productivity, they continue to harbor apprehensions regarding academic integrity, bias, and digital inequality. Universities are currently confronted with the task of redefining assessment methods, enhancing AI literacy, and developing ethical, transparent policies to guarantee responsible AI utilization. This article delves into the primary findings of the HEPI report, the opportunities and challenges that AI presents, and the essential measures that institutions must implement to adjust to this new academic environment.

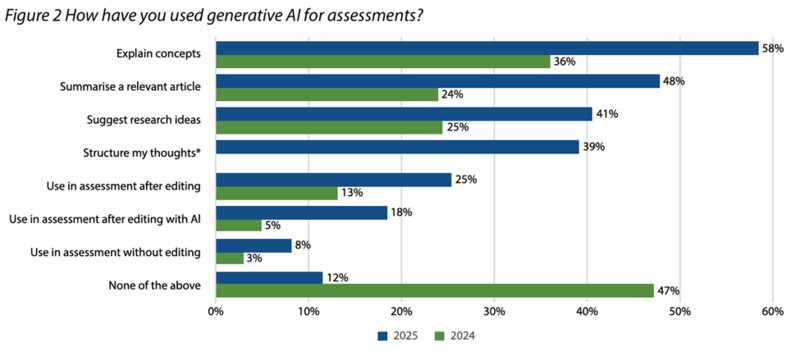

The report highlights the various ways students utilize AI, with the primary functions being:

1. Explaining Concepts – 58% of students use AI for this purpose, up from 36% in 2024.

2. Summarizing Articles – The largest increase in AI usage was observed in this category.

3. Generating Research Ideas – Students rely on AI for brainstorming and topic refinement.

4. Drafting Assignments – 18% of students directly incorporate AI-generated text into their work.

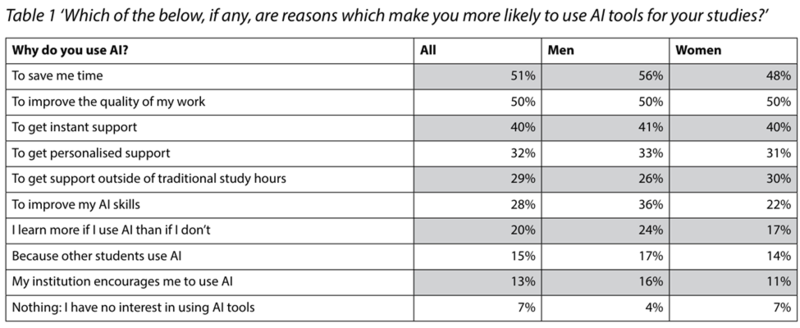

The primary motivations for using AI include saving time (51%), improving work quality (50%), and obtaining instant support (40%). The study also found that students in STEM disciplines and students from wealthier backgrounds are more likely to use AI tools than those studying humanities or those from lower-income backgrounds. This growing digital divide raises concerns about equity and access to educational resources.

Challenges of AI: Digital Inequality, Ethics, and Institutional Responses

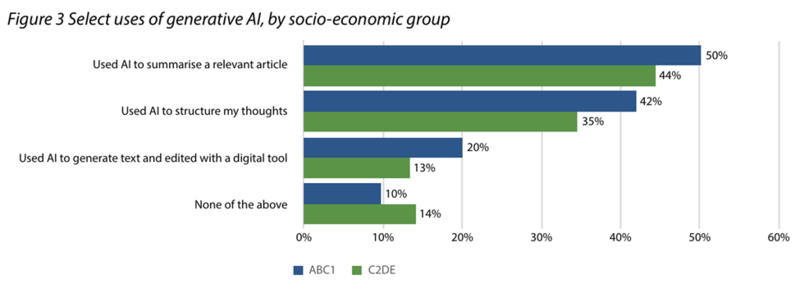

One of the report’s most concerning findings is the expanding digital divide in AI accessibility. Wealthier students have greater access to premium AI tools and subscriptions, leading to an unequal academic playing field. The report found that 50% of students from privileged backgrounds use AI to summarize articles, while only 44% of students from lower-income backgrounds do the same.

If AI literacy and accessibility are not addressed, students from disadvantaged backgrounds risk falling behind their peers, further widening existing educational inequalities. To combat this, universities must ensure AI resources are available to all students, providing institutional AI tools and structured AI training.

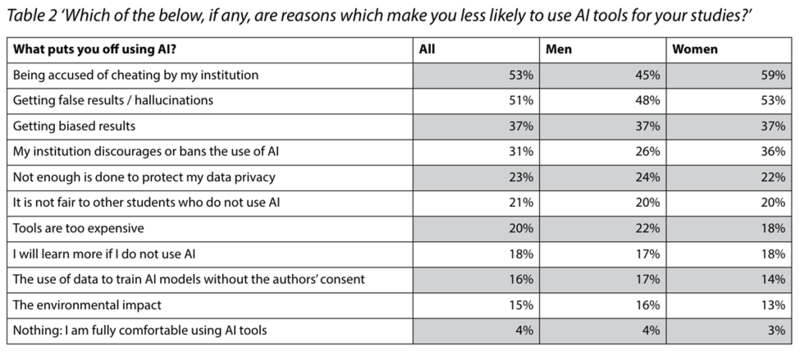

The rise of AI in academia has led to increasing concerns over academic integrity. Some universities compare AI-assisted work to using a calculator in mathematics, while others classify it as potential misconduct. According to the report:

- 18% of students admitted to directly integrating AI-generated content into their assignments.

- 76% believe their institution can detect AI-generated work, but detection reliability remains uncertain.

- 53% of students worry about being accused of cheating.

Dr. Thomas Lancaster, a computer scientist specializing in academic integrity at Imperial College London, warns that students who do not use AI will soon become a minority. With AI tools becoming essential in both education and the workforce, blanket bans on AI use are neither realistic nor productive.

Instead, institutions must develop clear and flexible AI policies, ensuring that students understand ethical AI usage while fostering critical thinking skills.

Institutional Response: Are Universities Keeping Up?

Despite the widespread use of AI, many universities remain ill-prepared to address its challenges:

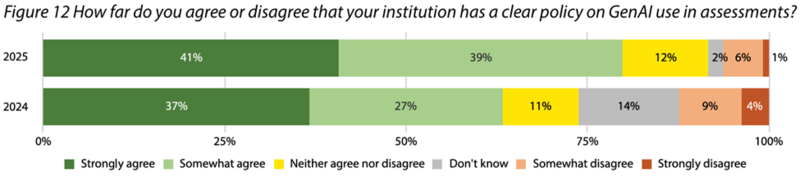

- 80% of students believe their institution has a clear AI policy, yet many report confusion over AI regulations.

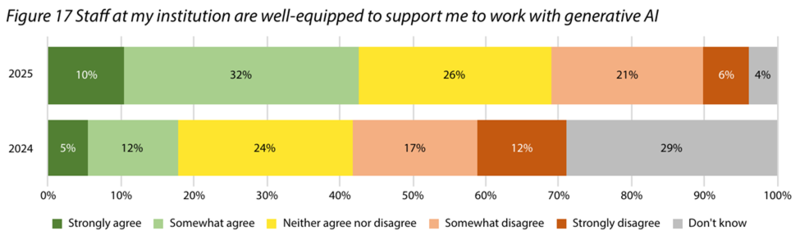

- Staff literacy in AI has improved, with 42% of students saying their lecturers are now better equipped to help with AI, up from 18% in 2024.

The Path Forward: How Universities Must Adapt to AI Integration

Universities are struggling with contradictory policies, with some institutions outright banning AI-generated content while others encourage responsible use but provide minimal guidance.

Moving forward, universities must shift their focus from punitive measures to AI literacy initiatives, ensuring that both students and staff can effectively navigate AI tools in academic settings.

What Needs to Change? Recommendations for Universities

The HEPI report outlines key recommendations to help universities adapt to AI’s rapid integration into education:

1. Revise Assessment Methods – Universities should update assessment formats to emphasize critical thinking and problem-solving, reducing reliance on AI-generated responses.

2. Provide Structured AI Training – Both students and faculty should receive formal AI literacy training to bridge the knowledge gap.

3. Develop Clear AI Policies – Institutions should create transparent and consistent AI policies, eliminating contradictions and confusion.

4. Ensure Equal Access to AI Tools – Universities should provide institutional AI resources, such as free access to ChatGPT, Grammarly, and Microsoft Copilot.

5. Encourage Collaboration Among Institutions – Universities should share best practices and policies, working together to integrate AI effectively and ethically.

The Student Generative AI Survey 2025 makes one point clear: AI is now an essential component of higher education, and universities that do not adjust promptly risk being left behind. Although AI offers substantial opportunities to enhance learning, universities must still confront ethical concerns, the digital divide, and academic integrity challenges.

Students will be at a disadvantage in their academic pursuits and their future careers if AI is not correctly integrated. Universities must act immediately to transition from restrictive AI policies to proactive strategies that promote ethical AI usage, ensure equitable access, and enhance AI literacy.

The future of education is undeniably AI-driven, and institutions must embrace this transformation so that students are prepared for the AI-powered workforce of tomorrow.